Agentic AI Systems Explained: Architecture, Components & Real-World Use Cases

Introduction — Why Are Agentic AI Systems Important in 2026?

Artificial intelligence is no longer limited to answering questions or generating text. In 2026, the focus has shifted toward AI systems that can plan, decide, and act autonomously. This shift is what’s driving the rapid rise of agentic AI systems.

Traditional AI applications and chatbots respond to prompts. Agentic AI systems go further. They understand goals, break them into tasks, use tools, evaluate outcomes, and improve their behavior over time. This makes them far more useful for real-world business and enterprise problems.

Agentic AI systems are emerging now because three technologies have matured together:

- Large Language Models (LLMs) capable of reasoning

- Reliable tool integration (APIs, databases, code execution)

- Persistent memory and feedback loops

When combined, these elements allow AI to move from reactive assistance to autonomous execution.

Many organizations are already using agentic AI systems behind the scenes. Examples include:

- Enterprise document analysis platforms

- Intelligent customer support automation

- Research and decision-support assistants

- Engineering and DevOps copilots

Yet, despite growing adoption, there is still confusion around what agentic AI systems actually are. Many people mix them up with chatbots, workflows, or simple automation tools.

This guide is designed to fix that.

In this article, you will learn:

- What an agentic AI system really is (without hype)

- The core components that make these systems work

- How agentic AI systems differ from agentic workflows

- Where they are being used in real-world scenarios

- The challenges, skills, and future direction of agentic AI

This is an architecture-first explanation, not a coding tutorial. The goal is clarity — so you understand how these systems work before worrying about tools or frameworks.

What Is an Agentic AI System?

An agentic AI system is an artificial intelligence system designed to pursue goals autonomously, rather than simply responding to user inputs. Unlike traditional AI applications, an agentic system can decide what to do next, not just what to say next.

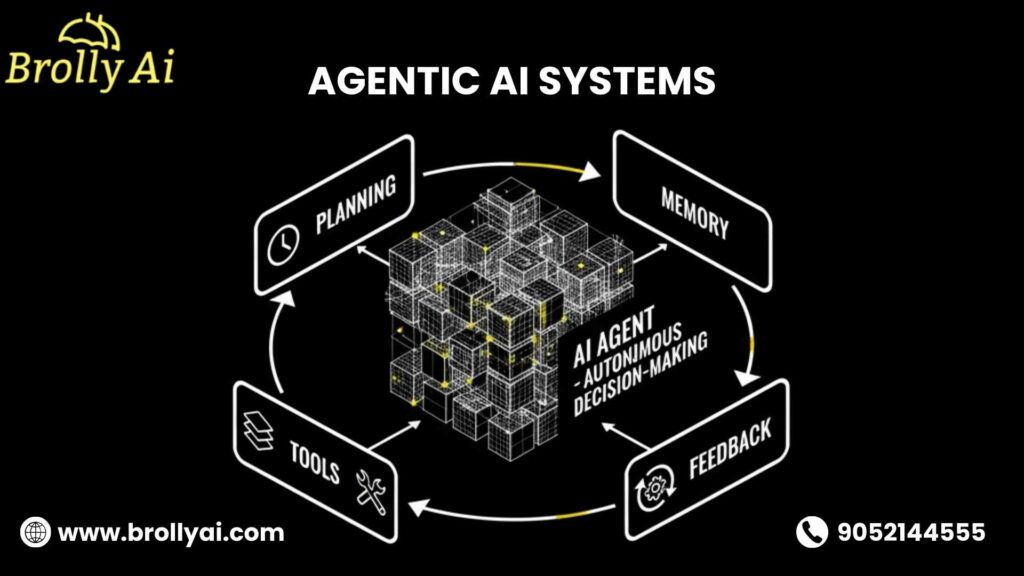

At its core, an agentic AI system combines reasoning, planning, memory, and action into a continuous loop. This allows the system to operate independently within defined boundaries.

What Makes an AI System “Agentic”?

The term agentic comes from the idea of agency — the ability to act with purpose.

An AI system is considered agentic when it demonstrates the following characteristics:

- Goal-driven behavior: The system works toward a defined objective instead of waiting for step-by-step instructions.

- Autonomous decision-making: It chooses actions based on context, constraints, and past outcomes.

- Tool usage and execution: The system can call APIs, query databases, or execute actions to move closer to its goal.

- Feedback and adaptation: It evaluates results and adjusts its strategy when something doesn’t work.

These traits separate agentic AI systems from traditional, reactive AI applications.

How Is an Agentic AI System Different From a Traditional AI Application?

Most AI applications today are reactive. They take an input and generate an output.

Agentic AI systems are active. They set a goal and continuously decide how to achieve it.

Here’s a simple comparison:

- A traditional AI chatbot answers questions when asked.

- An agentic AI system can identify what information is missing, retrieve it, validate results, and take follow-up actions — without being prompted at every step.

This shift from response generation to decision-making is the defining difference.

What Is the Simplest Way to Understand Agentic AI Systems?

A helpful mental model is to think of an agentic AI system as running a continuous loop:

Think → Plan → Act → Observe → Improve

- Think: Understand the goal and current context

- Plan: Break the goal into smaller tasks

- Act: Use tools or take actions

- Observe: Evaluate the outcome

- Improve: Adjust the plan based on feedback

Another way to look at this difference is:

- A chatbot is like an assistant who waits for instructions.

- An agentic AI system is like an employee who understands the objective and determines the best approach to achieve it.

This mental model will be useful as we explore the architecture and components of agentic AI systems in the next section.
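The Think → Plan → Act → Observe → Improve loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: `run_agent`, `plan`, and the toy `TOOLS` table are hypothetical names invented for this example, and a real system would call an LLM where the hard-coded planning rule sits.

```python
from typing import Callable

# Hypothetical tools: each takes the current number and returns a new one.
TOOLS: dict[str, Callable[[int], int]] = {
    "double": lambda x: x * 2,
    "increment": lambda x: x + 1,
}

def plan(current: int, goal: int) -> str:
    """Think + Plan: pick the next tool. A real agent would ask an LLM here."""
    return "double" if current * 2 <= goal and current > 0 else "increment"

def run_agent(start: int, goal: int, max_steps: int = 20) -> list[str]:
    """Repeat the loop until the goal is met or the step limit is reached."""
    current: int = start
    history: list[str] = []
    for _ in range(max_steps):          # a hard step limit keeps the loop bounded
        if current == goal:             # Observe: goal reached, so stop
            break
        tool = plan(current, goal)      # Think + Plan
        current = TOOLS[tool](current)  # Act
        history.append(tool)            # Improve: the next plan sees the new state
    return history
```

Calling `run_agent(1, 10)` returns the sequence of tools the agent chose on its own. The caller supplied only the goal, not the steps.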

What Are the Core Components of an Agentic AI System?

An agentic AI system is not just a single model or tool. It is a system-level architecture comprising multiple components that work together. Understanding these components is essential if you want to design, evaluate, or work with agentic AI systems in practice.

Below are the core building blocks that turn an AI model into an autonomous, goal-driven system.

The AI Agent: What Does It Actually Do?

The AI agent is the central decision-maker in the system. It is responsible for owning the goal and deciding how to achieve it.

Unlike a standard LLM prompt-response setup, the agent:

- Interprets the goal or objective

- Decides which actions to take

- Chooses which tools to use

- Evaluates outcomes and adjusts behavior

The agent does not just generate text. It decides what should happen next.

In simple terms

- The LLM provides reasoning capability.

- The agent wraps that reasoning in decision logic.

This distinction is critical. Without an agent layer, you only have a smart text generator — not an autonomous system.
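To make the split between the LLM and the agent layer concrete, here is a small sketch. Everything in it is an assumption made for illustration: `fake_llm` stands in for a real model call, and the `action: argument` text format, the `Decision` type, and the `Agent` class are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    action: str      # name of the action to take, or "finish"
    argument: str    # input for that action

def fake_llm(prompt: str) -> str:
    """Stand-in for a model call; returns text in an 'action: argument' format."""
    if "Observation:" in prompt:
        return "finish: report summarized"
    return "search: quarterly report"

class Agent:
    """Wraps raw model output in decision logic."""

    def __init__(self, llm: Callable[[str], str], allowed_actions: set[str]):
        self.llm = llm
        self.allowed_actions = allowed_actions

    def decide(self, goal: str, observation: str = "") -> Decision:
        prompt = f"Goal: {goal}"
        if observation:
            prompt += f"\nObservation: {observation}"
        raw = self.llm(prompt)                      # the LLM only produces text
        action, _, argument = raw.partition(":")
        action = action.strip()
        if action not in self.allowed_actions:
            # The agent layer, not the model, has the final say.
            return Decision("finish", f"rejected unknown action {action!r}")
        return Decision(action, argument.strip())
```

The model proposes; the agent layer parses, checks, and decides what actually happens next.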

Planner and Reasoning Layer: How Are Goals Broken Into Actions?

Agentic AI systems rely on a planner and reasoning layer to turn high-level goals into executable steps.

This layer handles

- Goal decomposition: Breaking a high-level objective into smaller, achievable tasks.

- Task sequencing: Deciding the correct order of actions.

- Reflection and retries: Detecting failures and attempting alternative approaches.

For example, if the goal is to analyze a report, the planner may decide to:

- Locate the document

- Extract relevant sections

- Summarize findings

- Validate accuracy

This reasoning layer allows the agent to operate even when the path to the goal is not obvious upfront.
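The report example can be sketched as a planner that decomposes a goal, runs the steps in order, and retries failures. This is only a sketch: `decompose` uses a fixed lookup table where a real planner would generate steps with an LLM, and `execute_plan` and its `run_step` callback are hypothetical names.

```python
from typing import Callable

def decompose(goal: str) -> list[str]:
    """Goal decomposition: a fixed lookup here; an LLM would generate this."""
    plans = {
        "analyze report": ["locate", "extract", "summarize", "validate"],
    }
    return plans.get(goal, [])

def execute_plan(steps: list[str],
                 run_step: Callable[[str, int], bool],
                 max_attempts: int = 3) -> dict[str, int]:
    """Run steps in order; retry a failed step up to max_attempts times."""
    attempts_used: dict[str, int] = {}
    for step in steps:                                  # task sequencing
        for attempt in range(1, max_attempts + 1):      # reflection and retries
            attempts_used[step] = attempt
            if run_step(step, attempt):                 # True means the step succeeded
                break
        else:
            raise RuntimeError(f"step {step!r} failed after {max_attempts} attempts")
    return attempts_used
```

Passing the attempt number to `run_step` lets a step try a different approach on its second or third attempt instead of repeating the same call.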

Memory Systems: How Do Agentic AI Systems Remember Context?

Memory is what allows an agentic AI system to behave consistently over time.

There are typically two types of memory

- Short-term memory: Holds the current task context and recent interactions.

- Long-term memory: Stores historical information, preferences, and learned patterns.

Many modern agentic systems use vector databases to store long-term memory. This enables:

- Semantic search

- Context recall across sessions

- Learning from previous outcomes

Without memory, an agent would repeat mistakes and lose continuity. Memory is what makes agentic behavior feel intentional and persistent.
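A toy version of the two memory types is sketched below. It assumes a word-count "embedding" and an in-memory list in place of a learned embedding model and a vector database, so it shows only the shape of the idea. The `Memory` class and its methods are hypothetical.

```python
import math
from collections import Counter, deque

def embed(text: str) -> Counter:
    """Toy embedding: word counts. Real systems use learned embedding models."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

class Memory:
    def __init__(self, short_term_size: int = 3):
        self.short_term = deque(maxlen=short_term_size)  # recent context only
        self.long_term: list[tuple[Counter, str]] = []   # kept for later recall

    def remember(self, text: str) -> None:
        self.short_term.append(text)
        self.long_term.append((embed(text), text))

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Semantic search: return the k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self.long_term, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]
```

Short-term memory forgets older entries as new ones arrive, while long-term memory can be searched by similarity rather than by exact match.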

Tools and Actions: How Do Agentic AI Systems Interact With the Real World?

An agentic AI system becomes useful only when it can take actions.

Tools are the bridge between reasoning and execution. Common tools include:

- APIs (internal and external)

- Databases and search systems

- Code execution environments

- Enterprise software tools

The agent decides

- Which tool to use

- What input to send

- How to interpret the output

This ability to act — not just respond — is what enables real-world automation.
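One common way to expose tools is a registry the agent looks up by name. The sketch below assumes that design. The `tool` decorator, the `call_tool` wrapper, and the two example tools are hypothetical, with in-memory data standing in for a real API or database.

```python
from typing import Callable

TOOLS: dict[str, Callable[..., object]] = {}

def tool(name: str):
    """Register a function under a name the agent can refer to."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register

@tool("add")
def add(a: float, b: float) -> float:
    return a + b

@tool("lookup_order")
def lookup_order(order_id: str) -> dict:
    # Hypothetical in-memory data standing in for a database or API.
    orders = {"A1": {"status": "shipped"}}
    return orders.get(order_id, {"status": "unknown"})

def call_tool(name: str, **kwargs) -> dict:
    """Execute a tool and wrap the outcome so the agent can interpret it."""
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**kwargs)}
    except TypeError as exc:   # typically wrong or missing arguments
        return {"ok": False, "error": str(exc)}
```

Returning a uniform `{"ok": ...}` envelope means the agent can read every tool output the same way, including failures.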

Environment, Constraints, and Safety: What Keeps Agents Under Control?

Autonomy without boundaries is risky. That’s why every agentic AI system operates within a defined environment.

This includes

- Permission levels

- Allowed tools and actions

- Data access rules

- Compliance and safety constraints

These constraints ensure the agent

- Cannot access unauthorized data

- Cannot perform unsafe actions

- Operates within business and legal limits

Well-designed constraints are a sign of mature, production-ready systems.
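The constraints above can be expressed as an explicit policy that is checked before every action. This is a sketch under assumptions: `Policy`, `Environment`, and `PolicyViolation` are hypothetical names, and a production system would usually enforce these rules in infrastructure as well as in application code.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Policy:
    allowed_tools: frozenset[str]
    readable_datasets: frozenset[str] = frozenset()
    max_actions: int = 10

class PolicyViolation(Exception):
    pass

class Environment:
    def __init__(self, policy: Policy):
        self.policy = policy
        self.actions_taken = 0

    def authorize(self, tool: str, dataset: Optional[str] = None) -> None:
        """Raise PolicyViolation unless the requested action is permitted."""
        if self.actions_taken >= self.policy.max_actions:
            raise PolicyViolation("action budget exhausted")
        if tool not in self.policy.allowed_tools:
            raise PolicyViolation(f"tool not allowed: {tool}")
        if dataset is not None and dataset not in self.policy.readable_datasets:
            raise PolicyViolation(f"dataset not readable: {dataset}")
        self.actions_taken += 1   # count only actions that were approved
```

The agent calls `authorize` before acting, so anything outside the policy fails before it reaches a real system.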

Feedback and Evaluation: How Do Agentic AI Systems Improve Over Time?

Agentic AI systems continuously evaluate their own actions.

This feedback loop includes

- Validating tool outputs

- Checking if goals were met

- Identifying errors or inefficiencies

Some systems also include human-in-the-loop feedback for critical decisions, especially in enterprise environments.

Feedback enables

- Self-correction

- Performance improvement

- Safer long-running behavior

Without evaluation, autonomy quickly becomes unpredictability.
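A feedback loop of this kind might look like the following sketch. The checks, the 0.5 confidence threshold, and the function names (`evaluate`, `act_with_feedback`, `ask_human`) are all assumptions chosen for illustration.

```python
from typing import Callable

def evaluate(result: dict, required_keys: set[str]) -> list[str]:
    """Return the problems found in a result (an empty list means it passed)."""
    problems = [f"missing field: {k}" for k in sorted(required_keys - set(result))]
    if result.get("confidence", 1.0) < 0.5:     # assumed threshold
        problems.append("low confidence")
    return problems

def act_with_feedback(action: Callable[[int], dict],
                      required_keys: set[str],
                      ask_human: Callable[[list[str]], dict],
                      max_tries: int = 2) -> dict:
    """Retry while evaluation fails, then escalate to a human reviewer."""
    problems: list[str] = []
    for attempt in range(max_tries):
        result = action(attempt)
        problems = evaluate(result, required_keys)   # validate the output
        if not problems:
            return result                            # goal met: self-corrected or first try
    return ask_human(problems)                       # human-in-the-loop fallback
```

The agent corrects itself where it can and hands the decision to a person, along with the reasons, where it cannot.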

Quick Summary: Core Components at a Glance

- AI Agent: Owns the goal and makes decisions

- Planner & Reasoning Layer: Breaks goals into actions

- Memory Systems: Preserve context and learning

- Tools & Actions: Enable real-world execution

- Environment & Constraints: Ensure safety and control

- Feedback Loop: Drives improvement and reliability

Together, these components form the foundation of every agentic AI system.

Agentic AI Systems vs Agentic Workflows — What’s the Difference?

One of the most common sources of confusion in this space is the difference between agentic AI systems and agentic workflows. The terms are often used interchangeably, but they refer to very different concepts.

Understanding this distinction is critical for

- Choosing the right architecture

- Avoiding overengineering

Are Agentic AI Systems and Agentic Workflows the Same Thing?

No.

They operate at different levels.

- Agentic AI systems focus on system-level autonomy and architecture.

- Agentic workflows focus on task execution and orchestration.

An easy way to think about it

- A system defines capabilities and intelligence.

- A workflow defines steps and execution order.

Key Differences Between Agentic AI Systems and Agentic Workflows

| Aspect | Agentic AI Systems | Agentic Workflows |
| --- | --- | --- |
| Scope | System-level design | Task-level execution |
| Focus | Architecture & autonomy | Process orchestration |
| Adaptability | Dynamic and context-aware | Mostly predefined |
| Decision-making | Continuous and autonomous | Limited to flow logic |
| Audience | Architects, senior engineers | Developers, builders |
| Example | Enterprise AI assistant | Research → summarize flow |

Why This Difference Matters in Practice

If your problem involves

- Persistent goals

- Changing conditions

- Long-running decisions

You need an agentic AI system.

If your problem involves

- A known sequence of steps

- Predictable execution

- Minimal decision-making

An agentic workflow is usually sufficient.

Many real-world applications combine both

- The system provides intelligence and autonomy.

- The workflow handles the execution of specific tasks.

How Agentic Systems and Workflows Work Together

In practice

- An agentic AI system decides what needs to be done.

- An agentic workflow executes how it gets done.

For example

- The agent identifies the need to analyze customer feedback.

- The workflow fetches data, summarizes it, and stores results.

This separation improves scalability, safety, and maintainability.
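The customer feedback example can be sketched as two separate pieces: a decision function and a fixed workflow. The step functions, the sample data, and the threshold of 100 new items are hypothetical, and a simple rule stands in for the agent's LLM-based reasoning.

```python
from typing import Callable, Optional

# Workflow steps: hypothetical stubs that each add to a shared context dict.
def fetch(ctx: dict) -> dict:
    return {**ctx, "data": ["slow checkout", "great support", "slow checkout"]}

def summarize(ctx: dict) -> dict:
    most_common = max(set(ctx["data"]), key=ctx["data"].count)
    return {**ctx, "summary": most_common}

def store(ctx: dict) -> dict:
    return {**ctx, "stored": True}

WORKFLOWS: dict[str, list[Callable[[dict], dict]]] = {
    "analyze_feedback": [fetch, summarize, store],
}

def run_workflow(name: str, ctx: dict) -> dict:
    """The workflow decides nothing: it runs its steps in a fixed order."""
    for step in WORKFLOWS[name]:
        ctx = step(ctx)
    return ctx

def agent_decide(signals: dict) -> Optional[str]:
    """The agent decides what to do; a rule stands in for LLM reasoning."""
    if signals.get("new_feedback_count", 0) > 100:   # assumed threshold
        return "analyze_feedback"
    return None
```

Because the workflow is a plain list of steps, it can be tested and audited on its own, while the decision logic can change without touching it.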

To understand execution logic in depth, see our guide on Agentic Workflows.

Do Agentic AI Systems Use Single or Multiple Agents?

Agentic AI systems can be designed using a single agent or multiple cooperating agents. The right choice depends on the complexity of the problem, the level of autonomy required, and operational constraints like cost and safety.

This section explains both approaches without going into unnecessary technical depth.

What Is a Single-Agent AI System?

A single-agent AI system uses one central agent to handle reasoning, planning, and decision-making.

In this setup

- One agent owns the goal

- All tools and memory connect to that agent

- Decisions flow through a single reasoning process

Advantages of single-agent systems:

- Easier to design and debug

- Lower infrastructure and compute cost

- Stronger control and predictability

Because of these benefits, most early-stage and internal agentic systems start with a single-agent architecture.

When Are Single-Agent Systems Enough?

Single-agent systems work well when

- The problem domain is narrow

- Decisions are relatively linear

- Safety and oversight are high priorities

Common examples include

- Internal enterprise assistants

- Document analysis tools

- Simple operations automation

For many real-world use cases, adding more agents does not improve outcomes — it only adds complexity.

When Do Multi-Agent Systems Make Sense?

A multi-agent AI system uses multiple specialized agents that work together.

Each agent may

- Handle a specific role or expertise

- Operate with its own memory or tools

- Coordinate or negotiate with other agents

Multi-agent systems are useful when

- Problems are complex and multi-dimensional

- Tasks require different types of expertise

- Work can be parallelized

Typical scenarios include

- Research and analysis platforms

- Enterprise-wide automation

- Large-scale decision support systems
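A minimal coordinator for specialized agents is sketched below, assuming each agent can be treated as a function from a task to a result. The role names and the `coordinate` function are hypothetical. Threads are used only to show that independent work can run in parallel.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Each "agent" is a plain function here; the roles are illustrative.
def research_agent(task: str) -> str:
    return f"sources for {task}"

def analysis_agent(task: str) -> str:
    return f"analysis of {task}"

AGENTS: dict[str, Callable[[str], str]] = {
    "research": research_agent,
    "analysis": analysis_agent,
}

def coordinate(task: str, roles: list[str]) -> dict[str, str]:
    """Fan a task out to specialized agents in parallel and collect results."""
    unknown = [r for r in roles if r not in AGENTS]
    if unknown:
        raise ValueError(f"no agent for roles: {unknown}")
    with ThreadPoolExecutor(max_workers=len(roles) or 1) as pool:
        futures = {role: pool.submit(AGENTS[role], task) for role in roles}
        return {role: future.result() for role, future in futures.items()}
```

Even in this small form, the coordinator is extra code that has to be designed, monitored, and debugged.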

Trade-Offs Between Single-Agent and Multi-Agent Systems

While multi-agent systems are powerful, they introduce challenges:

- Higher coordination complexity

- Increased cost and latency

- More difficult debugging and observability

That’s why most production systems follow this pattern:

- Start with a single agent

- Add more agents only when necessary

The goal is effective autonomy, not maximum complexity.

Key Takeaway

- Single-agent systems are simpler, cheaper, and easier to control

- Multi-agent systems enable specialization and scale

- The best architecture depends on the problem, not the hype

Where Are Agentic AI Systems Used in Real-World Applications?

One of the strongest indicators that agentic AI systems are more than a trend is their real-world adoption. Many organizations are already using these systems in production — often quietly — to handle complex, goal-driven tasks that traditional AI tools struggle with.

Below are some of the most practical and realistic use cases of agentic AI systems today.

Enterprise Document Intelligence

Large organizations deal with massive volumes of documents such as contracts, policies, and compliance reports. Agentic AI systems help by:

- Identifying relevant documents

- Extracting key information

- Cross-checking clauses and rules

- Flagging inconsistencies or risks

Unlike simple document summarization tools, agentic systems can decide what to look for next based on what they find.

This makes them especially useful in legal, compliance, and regulatory environments.

Customer Support and Operations Automation

Agentic AI systems are increasingly used to improve customer support workflows.

They can

- Analyze incoming tickets

- Classify urgency and intent

- Retrieve relevant information

- Propose or execute resolutions

Instead of handling one message at a time, agentic systems manage the entire resolution process, escalating to humans only when needed.

Research and Knowledge Work

Research tasks often involve open-ended exploration rather than fixed steps.

Agentic AI systems excel here by

- Formulating research questions

- Gathering information from multiple sources

- Evaluating credibility

- Synthesizing insights into structured outputs

This makes them valuable for:

- Market research

- Competitive intelligence

- Policy and trend analysis

Engineering and DevOps Assistance

In engineering environments, agentic AI systems support teams by:

- Investigating incidents

- Analyzing logs and metrics

- Identifying root causes

- Suggesting remediation steps

These systems don’t replace engineers. Instead, they reduce cognitive load and speed up decision-making during high-pressure situations.

Financial Risk and Decision Support

Financial teams use agentic AI systems for:

- Monitoring risk indicators

- Evaluating scenarios

- Detecting anomalies

- Supporting decision-making

Because these systems operate under strict constraints and oversight, they fit well into regulated environments where explainability and control matter.

Why These Use Cases Matter

Across all these examples, one pattern is clear:

Agentic AI systems are most valuable when

- Tasks are goal-oriented, not step-based

- Conditions change over time

- Decisions require context and evaluation

This is where traditional automation falls short — and where agentic systems deliver real impact.

What Are the Biggest Challenges in Building Agentic AI Systems?

While agentic AI systems offer powerful capabilities, they also introduce new technical and operational challenges. Understanding these challenges is essential for anyone evaluating or building such systems in real-world environments.

This section reflects the practical realities teams face in production.

How Do Hallucinations Impact Agentic AI Systems?

Hallucinations are risky in any AI system, but they are more dangerous in agentic systems because agents can take actions based on incorrect reasoning.

Common risks include

- Acting on false assumptions

- Using incorrect data to make decisions

- Triggering unnecessary or harmful actions

Because agentic systems operate autonomously, hallucinations can cascade into larger failures if not properly controlled.

Why Is Tool Misuse a Major Risk?

Agentic AI systems rely on tools to interact with the real world. If an agent misuses a tool, the impact can be immediate.

Examples of tool misuse include:

- Calling the wrong API

- Passing incorrect parameters

- Executing actions without proper validation

To mitigate this, production systems often include:

- Strict tool schemas

- Validation layers

- Permission-based access controls
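A strict tool schema with a validation layer can be as simple as the sketch below. The `refund` tool, its parameters, and the `validate_call` helper are hypothetical. Real systems often use JSON Schema or a typed validation library for the same purpose.

```python
TOOL_SCHEMAS = {
    # Hypothetical tool: required parameter names mapped to expected types.
    "refund": {"order_id": str, "amount": float},
}

def validate_call(tool: str, params: dict) -> list[str]:
    """Return validation errors for a proposed tool call (empty list = valid)."""
    schema = TOOL_SCHEMAS.get(tool)
    if schema is None:
        return [f"unknown tool: {tool}"]           # wrong API
    errors = []
    for name, expected in schema.items():
        if name not in params:
            errors.append(f"missing parameter: {name}")
        elif not isinstance(params[name], expected):
            errors.append(f"{name} must be {expected.__name__}")
    # Reject anything the schema does not mention.
    errors += [f"unexpected parameter: {p}" for p in params if p not in schema]
    return errors
```

The agent's proposed call is executed only when the returned list is empty, so a malformed call is caught before it touches a real system.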

How Do Teams Manage Cost and Latency?

Agentic AI systems tend to be long-running and resource-intensive.

Challenges include

- High token usage from repeated reasoning

- Latency from multiple tool calls

- Increased infrastructure costs

Without careful monitoring, costs can scale quickly. This is why cost control is a core architectural concern — not an afterthought.
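One way to treat cost as an architectural concern is to give every run an explicit budget. The sketch below assumes token and tool-call counts are reported by the caller; the `Budget` class and its limits are hypothetical.

```python
from dataclasses import dataclass

class BudgetExceeded(Exception):
    pass

@dataclass
class Budget:
    max_tokens: int
    max_tool_calls: int
    tokens_used: int = 0
    tool_calls: int = 0

    def charge(self, tokens: int = 0, tool_calls: int = 0) -> None:
        """Record usage, refusing any charge that would pass a limit."""
        if self.tokens_used + tokens > self.max_tokens:
            raise BudgetExceeded("token budget exceeded")
        if self.tool_calls + tool_calls > self.max_tool_calls:
            raise BudgetExceeded("tool-call budget exceeded")
        self.tokens_used += tokens
        self.tool_calls += tool_calls
```

The agent loop calls `charge` before each model or tool call, so a runaway run stops at a known ceiling instead of on the invoice.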

Why Is Observability and Debugging So Difficult?

Debugging agentic AI systems is harder than debugging traditional software.

Reasons include

- Non-deterministic behavior

- Hidden reasoning steps

- Complex decision paths

Teams need strong observability tools to:

- Trace decisions

- Monitor agent behavior

- Identify failure patterns

Observability is critical for trust and reliability.
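Decision tracing can start with structured events recorded at each step. The sketch below assumes a simple in-memory tracer. `TraceEvent`, `Tracer`, and the event kinds are hypothetical names, and a production system would send these events to a logging or tracing backend.

```python
import json
import time
from dataclasses import dataclass, field, asdict

@dataclass
class TraceEvent:
    step: int
    kind: str          # e.g. "decision", "tool_call", "observation"
    detail: dict
    timestamp: float = field(default_factory=time.time)

class Tracer:
    def __init__(self):
        self.events: list[TraceEvent] = []

    def record(self, kind: str, **detail) -> None:
        """Append an event, numbering steps in the order they happen."""
        self.events.append(TraceEvent(len(self.events), kind, detail))

    def failures(self) -> list[TraceEvent]:
        """Events explicitly marked as failed, for spotting failure patterns."""
        return [e for e in self.events if e.detail.get("ok") is False]

    def to_jsonl(self) -> str:
        """Serialize as one JSON object per line for log pipelines."""
        return "\n".join(json.dumps(asdict(e)) for e in self.events)
```

Recording why a tool was chosen, not only that it was called, is what makes a non-deterministic run reviewable afterwards.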

What Security and Permission Issues Matter Most?

Autonomous systems raise serious security concerns.

Key issues include

- Unauthorized data access

- Excessive permissions

- Unintended actions

Enterprise-grade agentic systems enforce:

- Principle of least privilege

- Audit logs

- Clear action boundaries

Security and safety are what separate experiments from production-ready systems.

Key Takeaway

Building agentic AI systems is not just about making agents smarter. It’s about making them safe, reliable, observable, and cost-effective.

These challenges explain why successful agentic systems are designed carefully — with architecture and governance at the center.

How Are Agentic AI Systems Designed in Practice? (High-Level Architecture)

Agentic AI systems are built using a layered architecture. While implementations vary across organizations, most production systems follow a similar high-level design pattern.

This section explains that architecture at a high level, without framework-specific details, focusing on how the pieces fit together.

LLM as the Reasoning Core

At the center of most agentic AI systems is a large language model (LLM).

The LLM is responsible for

- Understanding goals and context

- Reasoning through decisions

- Interpreting tool outputs

However, the LLM alone is not the system. It acts as the reasoning engine, not the controller.

This distinction is important. Treating the LLM as a component — rather than the entire solution — leads to more stable and controllable systems.

Orchestration and State Management Layer

The orchestration layer manages how the agent operates over time.

It handles

- Task sequencing

- State tracking

- Decision checkpoints

This layer ensures the agent

- Knows what has already been done

- Avoids repeating actions

- Can pause, resume, or stop safely

Without orchestration, agent behavior becomes chaotic and hard to manage.
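State tracking with pause and resume can be sketched as follows. The `Orchestrator` class is hypothetical, and JSON strings stand in for whatever durable store a real system would use for checkpoints.

```python
import json
from typing import Callable, Optional

class Orchestrator:
    """Tracks which steps are done so a run can pause and resume safely."""

    def __init__(self, steps: list[str], completed: Optional[list[str]] = None):
        self.steps = steps
        self.completed = list(completed or [])

    def run(self, execute: Callable[[str], None], max_steps: Optional[int] = None) -> None:
        """Execute pending steps in order, skipping anything already completed."""
        done_this_call = 0
        for step in self.steps:
            if step in self.completed:
                continue                      # avoid repeating actions
            if max_steps is not None and done_this_call >= max_steps:
                return                        # pause at a decision checkpoint
            execute(step)
            self.completed.append(step)       # record state after each step
            done_this_call += 1

    def checkpoint(self) -> str:
        """Serialize the state so another process can pick the run up later."""
        return json.dumps({"steps": self.steps, "completed": self.completed})

    @classmethod
    def resume(cls, saved: str) -> "Orchestrator":
        state = json.loads(saved)
        return cls(state["steps"], state["completed"])
```

Because completed steps are stored outside the model's context, a restart continues from the last checkpoint rather than from the beginning.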

Memory and Retrieval Layer

Memory allows agentic AI systems to retain context beyond a single interaction.

This layer typically includes

- Short-term working memory

- Long-term memory stored in vector databases

The retrieval layer ensures the agent

- Recalls relevant past information

- Grounds decisions in stored knowledge

- Maintains continuity across sessions

Memory turns isolated decisions into coherent behavior.

Tool Integration and Validation Layer

Tools are how agents interact with external systems.

This layer manages

- Tool definitions and schemas

- Input and output validation

- Error handling

Well-designed tool layers prevent:

- Unsafe actions

- Invalid calls

- Silent failures

Validation is critical because tools affect real systems and data.

Monitoring, Evaluation, and Guardrails

Production-grade agentic AI systems include continuous monitoring.

This includes

- Performance metrics

- Cost tracking

- Safety and compliance checks

Guardrails ensure

- The agent stays within the allowed boundaries

- Failures are detected early

- Human intervention is possible when needed

Monitoring turns autonomy into something you can trust.

Architectural Takeaway

Agentic AI systems succeed when

- Reasoning is separated from control

- Actions are validated

- Memory is intentional

- Monitoring is built in from the start

This architecture-first mindset is what enables safe, scalable deployment.

What Skills Are Needed to Work on Agentic AI Systems?

Working with agentic AI systems requires a slightly different skill set than building traditional AI applications. Because these systems are architecture-heavy and autonomy-driven, success depends as much on system thinking as it does on models.

Below are the core skills that matter most in 2026.

Understanding Large Language Model (LLM) Fundamentals

You don’t need to train models from scratch, but you do need to understand:

- How LLMs reason and generate outputs

- Strengths and limitations of LLM-based reasoning

- Common failure modes like hallucinations

This knowledge helps you design systems that work with the model’s strengths instead of fighting its weaknesses.

Prompt Engineering for Reasoning and Control

Prompting in agentic systems goes beyond simple instructions.

Important skills include

- Designing prompts for planning and reflection

- Structuring system prompts for safety and consistency

- Controlling tone, scope, and decision boundaries

Good prompts act like soft constraints that guide agent behavior.

Retrieval-Augmented Generation (RAG) and Vector Databases

Most agentic AI systems rely on external knowledge.

You should understand

- How vector databases store semantic memory

- When to retrieve information vs reason from context

- How retrieval improves accuracy and grounding

RAG is often what separates reliable systems from brittle ones.

System Design Thinking

This is one of the most critical skills.

System design thinking includes

- Breaking problems into components

- Defining responsibilities between layers

- Managing trade-offs between autonomy, safety, and cost

Agentic AI systems are systems first, models second.

Evaluation, Monitoring, and Observability

Because agentic systems make decisions autonomously, evaluation is essential.

Key skills include

- Designing evaluation metrics

- Monitoring agent behavior over time

- Detecting failures early

These skills are increasingly valued in production AI roles.

Learning Path Note

Many of these skills are now taught together in structured Generative AI programs, where system design, LLMs, and evaluation are covered as a single discipline.

Key Takeaway

To work effectively with agentic AI systems, you need to:

- Think in systems

- Design for safety and control

- Focus on evaluation, not just output quality

These skills apply equally to engineers, architects, and AI product teams.

What Is the Future of Agentic AI Systems?

Agentic AI systems are still evolving, but their future direction is becoming clearer. Instead of dramatic or speculative changes, the next phase is about controlled, enterprise-ready adoption.

The focus is shifting from experimentation to reliability, safety, and real business value.

Will Enterprises Fully Adopt Agentic AI Systems?

Enterprise adoption is already happening, but cautiously.

Most organizations are

- Starting with internal-facing systems

- Limiting agent autonomy

- Adding strong guardrails and monitoring

Instead of replacing humans, agentic AI systems are designed to support decision-making and assist with execution. This gradual approach reduces risk while delivering measurable benefits.

Are Multi-Agent “Swarms” Becoming Practical?

Multi-agent systems will continue to grow, but only in specific, high-value scenarios.

Expect to see

- Small groups of specialized agents

- Clearly defined roles and boundaries

- Strong coordination rules

Large, uncontrolled agent swarms are unlikely to become mainstream due to cost, complexity, and safety concerns.

How Will Regulation and AI Safety Shape Agentic Systems?

Regulation will play a major role in shaping how agentic AI systems are deployed.

Key trends include

- Stronger auditability requirements

- Clear accountability for AI actions

- Mandatory safety and compliance checks

These requirements favor well-architected systems over quick experiments.

What Will Human–AI Collaboration Look Like?

The future of agentic AI is collaborative, not autonomous in isolation.

Humans will

- Define goals and constraints

- Review critical decisions

- Handle exceptions

Agentic AI systems will

- Execute routine and complex tasks

- Provide recommendations and insights

- Reduce cognitive and operational load

This balance ensures trust, safety, and long-term adoption.

Future Takeaway

The future of agentic AI systems is not about replacing humans. It’s about building reliable AI collaborators that operate within clear boundaries and deliver consistent value.

Conclusion — Why Agentic AI Systems Matter More Than Ever

Agentic AI systems represent a fundamental shift in how artificial intelligence is designed and used. Instead of reacting to individual prompts, these systems can understand goals, plan actions, use tools, evaluate outcomes, and improve over time. This makes them far better suited for real-world, enterprise-scale problems.

What truly sets agentic AI systems apart is architecture. The most successful implementations are not built by simply adding tools to an LLM. They are carefully designed systems with clear components, strong constraints, reliable memory, and continuous monitoring. This is what turns autonomy into something safe, scalable, and trustworthy.

As adoption grows, a few things are clear:

- Chatbots and simple automation are no longer enough

- Understanding system-level design is now a core AI skill

- Agentic AI systems will increasingly support human decision-making across industries

Whether you are an engineer, architect, AI learner, or business leader, understanding how agentic AI systems work gives you a long-term advantage. It helps you ask better questions, design better solutions, and avoid costly mistakes driven by hype.

If you want to work with agentic AI systems effectively

- Start by mastering the core architecture, not tools

- Focus on safety, evaluation, and observability

- Learn how autonomy and control work together

Agentic AI is not about building smarter models.

It’s about building better systems.

The sooner you understand that shift, the better prepared you’ll be for the future of AI.

FAQs

An agentic AI system is an AI system that can pursue goals autonomously by planning, taking actions, using tools, and learning from feedback instead of only responding to prompts.

Chatbots respond to user inputs, while agentic AI systems decide what actions to take to achieve a goal, often without continuous human input.

Yes. Many enterprises use agentic AI systems for document analysis, customer support automation, research assistance, and internal decision support.

An AI system is agentic when it shows goal-driven behavior, autonomy, tool usage, memory, and feedback-based improvement.

No. Many production systems use a single agent. Multi-agent systems are used only when tasks require specialization or coordination.

Agentic workflows focus on executing predefined steps, while agentic AI systems focus on autonomous decision-making and system-level architecture.

LLMs act as the reasoning engine, helping agents understand goals, plan actions, and interpret results—but they are not the entire system.

They use tools like APIs, databases, and code execution to take real-world actions, validate results, and move closer to their goals.

Memory allows agents to retain context, learn from past actions, and maintain consistency across tasks and sessions.

Key risks include hallucinations, tool misuse, security issues, high costs, and limited observability if systems are poorly designed.

They use feedback loops, retries, validation layers, and sometimes human-in-the-loop oversight to detect and correct errors.

Yes, when designed with strong constraints, permissions, monitoring, and auditability. Safety depends on architecture, not autonomy alone.

No. They are designed to assist humans by handling complex or repetitive tasks, not to replace human judgment or accountability.

Industries like enterprise IT, finance, customer support, research, legal, and operations benefit the most from agentic AI systems.

Costs are managed through orchestration, limiting agent runtime, controlling tool usage, and monitoring token and compute consumption.

Key skills include LLM fundamentals, prompt engineering, system design, RAG, vector databases, and evaluation and monitoring.

Not exactly. Agentic AI systems operate autonomously within defined constraints, while fully autonomous AI implies unrestricted independence.

Most production systems include human oversight for critical actions, especially in regulated or high-risk environments.

Traditional automation follows fixed rules, while agentic AI systems adapt, reason, and decide actions dynamically based on context.

Their biggest advantage is the ability to handle complex, goal-driven tasks that require reasoning, adaptation, and real-world interaction.