Prompt Engineering Course in Hyderabad with 100% Placement Assistance

- Classroom

- 2-3 Months

- Online

- Capstone Project


Batch Details

| Trainer Names | Madhu, Dr. Prasad |
| Trainer Experience | 12+ Years, 20+ Years |
| Next Batch Date | 7th July 2025 (Offline), 14th July 2025 (Online) |
| Training Modes | Online Training and Offline Training |
| Course Duration | 3 Months (Online & Offline) |
| Call us at | +91 9052144555 |
| Email us at | brollyai.com@gmail.com |
| Demo Class Details | Click here to chat on WhatsApp |

Prompt Engineering Course Curriculum

- Overview of Prompt Engineering

- Importance in AI and Natural Language Processing (NLP)

- Historical Context and Evolution

Basics of Python Programming

Installation and Setup:

● Installing Python and setting up a development environment (IDEs like PyCharm, VSCode, Jupyter Notebooks)

Syntax and Basic Constructs:

● Variables and data types (integers, floats, strings, booleans)

● Basic input and output

● Comments and documentation

Control Structures

Conditional Statements:

● if, elif, else

Loops:

● for, while

● Loop control statements (break, continue, pass)

Functions

Defining Functions:

● Parameters and return values

Scope and Lifetime:

● Local and global variables

Lambda Functions:

● Anonymous functions

Core Data Structures

Lists:

● Creating, accessing, modifying, and iterating over lists

● List comprehensions

Tuples:

● Creating and using tuples

● Unpacking tuples

Sets:

● Creating and using sets

● Set operations (union, intersection, difference)

Dictionaries:

● Creating and using dictionaries

● Dictionary methods and comprehensions

Advanced Data Structures

Collections Module:

● defaultdict, Counter, OrderedDict, deque

File Operations

Reading and Writing Files:

● Opening, reading, writing, and closing files

● Working with different file modes (r, w, a, rb, wb)

Working with CSV and JSON:

● Reading from and writing to CSV and JSON files using the csv and json modules

OOP Basics

Classes and Objects:

● Defining classes and creating objects

● Instance variables and methods

Class Variables and Methods:

● Using class variables and class methods

Inheritance:

● Single and multiple inheritance

Polymorphism and Encapsulation:

● Method overriding

● Private variables and name mangling

Modular Programming

Creating and Importing Modules:

● Defining and using modules

Packages:

● Organizing code into packages

● Importing from packages

Error Handling

Exception Types:

● Common exceptions (ValueError, TypeError, etc.)

Try, Except Blocks:

● Using try, except, else, and finally

Custom Exceptions:

● Creating and raising custom exceptions

Debugging

Debugging Techniques:

● Using print statements and logging

● Using debuggers (pdb)

Decorators and Context Managers

Decorators:

● Function decorators

● Class decorators

Context Managers:

● Using the with statement

● Creating custom context managers with __enter__ and __exit__

Iterators and Generators

Iterators:

● Creating iterators using __iter__ and __next__

Generators:

● Creating generators with yield

● Generator expressions

NumPy:

● Arrays and matrix operations

Pandas:

● DataFrames for data manipulation

● Reading and writing data (CSV, Excel)

Data Visualization

Matplotlib:

● Plotting graphs and charts

Seaborn:

● Statistical data visualization

Unit Testing

Unittest Framework:

● Writing and running tests

● Test fixtures and test suites

Pytest:

● Advanced testing with pytest

Industry-Standard Tools

Get Practical Skills with Prompt Engineering Tools


Key Points

Comprehensive Curriculum

Industry-Relevant Content

Experienced Instructors

Hands-On Projects

Placement Assistance

Flexible Learning Options

Networking Opportunities

Students at Brolly AI can network with industry professionals and build connections that are valuable for career growth and development.

Continuous Learning Support

Certification Programs

Once you finish the Prompt Engineering training, the institute gives you a course completion certificate. This certificate shows employers that you are proficient in the field, which can help you find more job opportunities.

What is Prompt Engineering?

- Prompt Engineering is about creating clear instructions for artificial intelligence systems to generate specific and desired outputs.

- Prompt Engineers work on improving communication between humans and AI systems, making interactions more effective and better suited to user needs.

- Prompt Engineering involves designing queries or requests that guide AI models to provide accurate and relevant information or creative content.

- The goal of Prompt Engineering is to make AI models easier to understand and control, more user-friendly, and better aligned with specific tasks or goals.

- In Prompt Engineering, the focus is on creating input prompts that help AI models produce the best possible results. This involves carefully choosing the words and phrases you use when giving instructions to the AI.

- It plays a crucial role in steering language models so that they produce the desired responses in applications such as chatbots, content creation, and data analysis.
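As a rough illustration of the points above, the sketch below contrasts a vague prompt with a more specific one built from a reusable template. It is a minimal example using only the Python standard library; the template fields (`role`, `task`, `output_format`), the `build_prompt` helper, and the bookstore scenario are all assumptions made for illustration, not part of the course material or of any particular AI system's API.

```python
from string import Template

# Hypothetical template: the field names below are illustrative assumptions,
# not part of any specific AI system's API.
PROMPT_TEMPLATE = Template(
    "You are $role.\n"
    "Task: $task\n"
    "Respond in this format: $output_format"
)


def build_prompt(role: str, task: str, output_format: str) -> str:
    """Fill the template so every prompt states a role, a task, and a format."""
    return PROMPT_TEMPLATE.substitute(
        role=role, task=task, output_format=output_format
    )


# A vague prompt leaves the audience, scope, and format unspecified.
vague_prompt = "Tell me about our product."

# A specific prompt spells out who is answering, what to do, and how to answer.
specific_prompt = build_prompt(
    role="a support assistant for an online bookstore",
    task="Summarize the return policy in plain language.",
    output_format="three short bullet points",
)

print(specific_prompt)
```

The resulting string can be sent to whichever language model you are working with. Keeping the template separate from the values makes it easier to adjust the wording once and reuse it across many requests.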

Pre-Requisites of Prompt Engineering Course

- A fundamental understanding of programming languages, such as Python, is often needed for Prompt Engineering Training in Hyderabad.

- Having a basic knowledge of artificial intelligence concepts, including machine learning fundamentals, sets a solid foundation for individuals pursuing Prompt Engineering training.

- Familiarity with the basics of Natural Language Processing is beneficial, as Prompt Engineering often involves working with language models and text-based inputs.

- Proficiency in problem-solving, critical thinking, and logical reasoning is helpful, as these skills are crucial for effective prompt design and optimization.

Prompt Engineering Training in Hyderabad

Course Outline

01

The course begins with an introduction to Prompt Engineering, providing a foundational understanding of its importance and applications in artificial intelligence.

02

Students will learn programming basics, with a focus on Python, to gain the skills needed to implement prompt engineering techniques.

03

The course teaches important ideas in artificial intelligence, making sure participants understand machine learning basics and algorithms well.

04

The course includes a special module on Natural Language Processing (NLP), focusing on how language models and text processing are used in prompt design.

05

The course includes practical labs and simulations that let students apply what they learn in real-time projects, which can improve their career opportunities.

06

The course then moves on to more advanced subjects, teaching techniques for making prompt inputs better and improving the performance of AI models.

07

Our prompt engineering course offers internships, which give students the chance to use their prompt engineering skills in real-life situations.

08

The course concludes with a capstone project in which students showcase their proficiency in prompt engineering and its applications in different AI scenarios.

09

The course offers continuous support and mentorship, ensuring students receive guidance and feedback throughout their learning journey.


Course Overview

Brolly AI's Prompt Engineering course in Hyderabad is designed to give learners the essential skills needed to communicate effectively with artificial intelligence systems and optimize their output. The course begins with a detailed introduction to Prompt Engineering, highlighting its importance in shaping the behavior of AI models. Students then learn the fundamentals of programming, focusing on Python, to build a strong foundation for implementing prompt engineering techniques.

As the course progresses, participants explore key concepts in artificial intelligence, with a specific focus on machine learning principles and algorithms. A dedicated module on Natural Language Processing (NLP) helps learners understand the role of language models and text processing in creating precise and effective prompts. Brolly AI's Prompt Engineering course provides both theoretical knowledge and practical experience, preparing students to contribute effectively in the dynamic field of AI.

Modes Of Training

Classroom Training

- Industry Expert Trainers

- Hands-on Industry Projects

- Group Discussions

- One-on-One Mentorship

- Covers Advanced Topics

Online Training

- Virtual Learning Sessions

- Interactive Webinars

- Digital Learning Modules

- Online Practical Labs

- Flexible Learning Schedules

Corporate Training

- Customized Training Programs

- Interactive Team Development

- Industry-Relevant Content

- On-Site Workshops

- Customized Learning Paths

Prompt Engineering Jobs

01

Prompt Engineer: Designs and improves prompts for AI systems, ensuring they generate accurate and relevant outputs based on user queries.

02

AI Language Model Tuner: Optimizes language models by adjusting prompt inputs, tuning model parameters, and enhancing overall performance.

03

Chatbot Developer: Specializes in creating prompts for chatbots, building conversational experiences by guiding the AI to understand and respond to user messages effectively.

04

Content Generation Specialist: Uses prompt engineering and AI capabilities to generate creative and informative content, such as articles, summaries, or product descriptions.

05

Data Analyst with Prompt Engineering Skills: Integrating prompt engineering techniques into data analysis tasks, these professionals optimize queries to acquire meaningful knowledge from large datasets.

06

Virtual Assistant Designer: Creates prompts for virtual assistants to improve user interactions, making the AI assistants more responsive and better suited to users' specific needs.

07

AI Product Manager: Managing the prompt design for AI products to meet user expectations and working with developers to improve how the products work.

08

User Experience (UX) Specialist with AI Focus: Applies prompt engineering principles to user interface design so that AI-powered apps are easy and smooth to use.

09

AI Researcher – Prompt Studies: Conducts research on prompt engineering methodologies, contributing to advancements in the field and developing best practices for effective prompt design.

10

Educator in AI and Prompt Engineering: Sharing knowledge and skills in prompt engineering through teaching and training, preparing the next generation of professionals in this developing field.

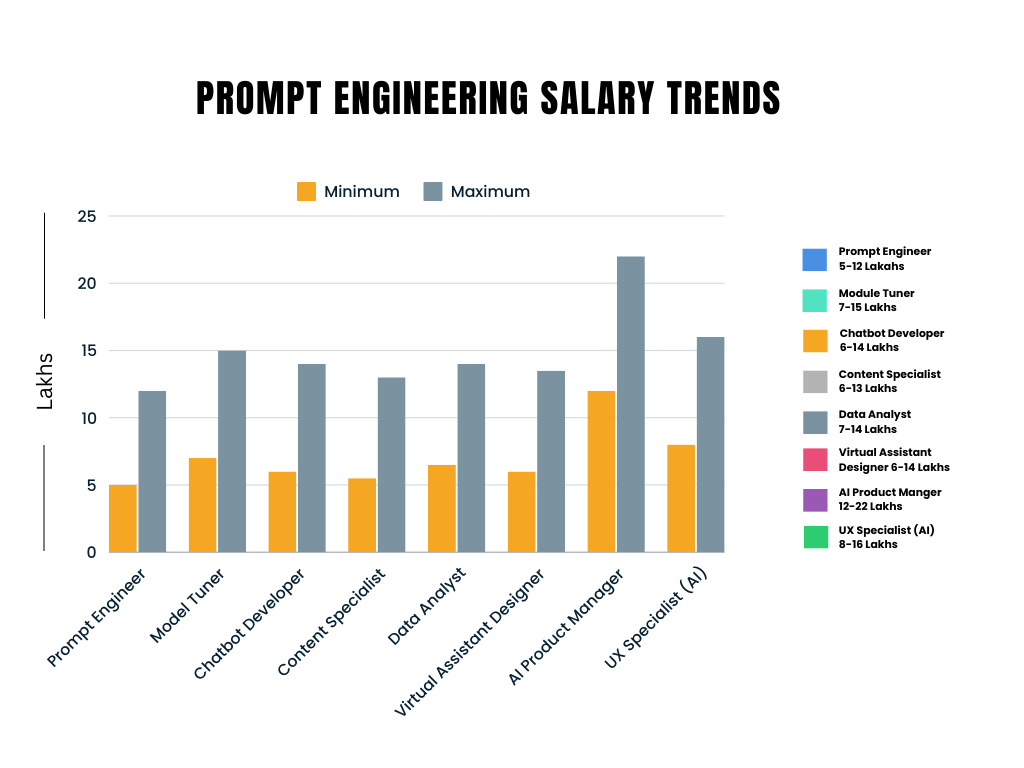

Prompt Engineering Salaries

Salary Overview by Designation

01

Prompt Engineer: Earns between INR 5,00,000 and INR 12,00,000 per year.

02

AI Language Model Tuner: Earns between INR 7,00,000 and INR 15,00,000 per year.

03

Chatbot Developer: Earns between INR 6,00,000 and INR 14,00,000 per year.

04

Content Generation Specialist: Earns between INR 5,50,000 and INR 13,00,000 per year.

05

Data Analyst with Prompt Engineering Skills: Earns between INR 6,50,000 and INR 14,00,000 per year.

06

Virtual Assistant Designer: Earns between INR 6,00,000 and INR 13,50,000 per year.

07

AI Product Manager: Earns between INR 12,00,000 and INR 22,00,000 per year.

08

User Experience (UX) Specialist with AI Focus: Earns between INR 8,00,000 and INR 16,00,000 per year.

The salary ranges listed above are indicative. Actual pay may be lower or higher than these figures, depending mostly on your skills and experience in prompt engineering and AI.

Prompt Engineering Training & Certification

The Prompt Engineering Certification offered by Brolly AI is valuable in today's rapidly changing tech environment. The certification validates an individual's proficiency in writing accurate instructions for AI systems and indicates their ability to optimize and enhance the performance of language models. As the demand for AI applications continues to grow, organizations seek professionals with specialized prompt engineering skills to ensure effective communication between humans and AI systems.

Testimonials

Sara Patel

@sarapatel

Raj Kumar

@raj kumar

Ananya Gupta

@ananyagupta

Amit Singh

@amitsingh

The Brolly AI Prompt Engineering course is a career path. I went in with zero coding skills, and now I'm confidently creating prompts for AI models. The hands-on projects were a game-changer. They also offer Generative AI and Machine Learning courses. Thank you, Brolly AI, for your wonderful support.

Priya Sharma

@priyasharma

Nikhil Verma

@nikhilverma


Benefits

Enhanced Communication with AI

Prompt engineering training gives individuals the skills to communicate effectively with AI systems, enabling them to write precise instructions and receive the desired outcomes.

Career Advancement

Acquiring prompt engineering skills opens doors to various job roles in AI development, chatbot design, content creation, and more, providing more career opportunities in the expanding field of artificial intelligence.

Improved Problem-Solving

Training in prompt engineering develops problem-solving skills as individuals learn to optimize prompts, improving the efficiency and accuracy of AI models in generating specific outputs.

Increased Employability

In a technology-focused job market, prompt engineering certification improves employability, helping individuals stand out to employers seeking professionals who can tune AI systems for optimal performance.

Versatility in AI Applications

Individuals with prompt engineering training gain adaptability in applying AI across different domains, from natural language processing to data analysis, making them valuable assets in various industries.

Personalized User Experiences

Learning prompt engineering allows professionals to create personalized user experiences in AI applications, ensuring that human-AI interactions are tailored to specific needs and preferences.

Prompt Engineering Course Market Trend

01

Companies are increasingly investing in prompt engineering to improve the accuracy and relevance of AI-generated content.

02

The market trend shows a growing demand for professionals skilled in adjusting language models through effective prompt design.

03

As chatbots become more common, prompt engineering is gaining importance for creating conversational and user-friendly AI interactions.

04

Prompt engineering is emerging as a key factor in making complex AI models clearer to work with and easier to control.

05

Job postings for prompt engineering roles have seen a remarkable increase, indicating a rising demand for specialists in the field.

06

The trend reflects a shift towards more personalized AI applications, driving the need for experts who can optimize prompts for specific user contexts.

07

Organizations are recognizing the value of prompt engineering in content creation, data analysis, and other AI-driven processes, which is driving its market growth.

08

Keeping up with prompt engineering techniques is important because AI technology keeps improving. It helps us stay in step with the changes happening in artificial intelligence.

Frequently Asked Questions

Prompt Engineering focuses on training individuals to effectively use language models like GPT-3 for various applications. Unlike traditional programming courses, it emphasizes generating human-like text based on prompts.

Brolly AI is renowned for its expertise in artificial intelligence and offers a comprehensive curriculum, experienced instructors, and hands-on projects to ensure a robust learning experience.

Yes, the course is designed to accommodate learners of all backgrounds. Basic computer literacy is recommended, but no prior programming experience is necessary.

The Prompt Engineering course offered by Brolly AI runs for 3 months.

The course includes hands-on projects that cover diverse applications such as chatbot development, content generation, and natural language understanding, allowing you to apply prompt engineering skills in real-world scenarios.

Yes, Brolly AI provides post-course support and may offer job placement assistance to help students transition into the workforce.

Yes, Brolly AI provides online access to course materials and recorded lectures, giving students the flexibility to review content at their own pace.

While no specific prerequisites are required, having a basic understanding of machine learning concepts and programming languages like Python can be beneficial.

Brolly AI offers alumni resources, newsletters, or community forums where you can stay connected with fellow learners and stay informed about industry updates.

Students can explore diverse career paths, including roles in natural language processing, AI research, chatbot development, content creation, and more. The skills gained are versatile and applicable across various industries.

Other Relevant Courses

MLOPS

Generative AI

Machine Learning

Prompt Engineering Course in Hyderabad #1 Best Training Online

Brolly AI is a training institute that offers a prompt engineering course in Hyderabad with placement assistance, including online training with certification.

Course Provider: Organization

Course Provider Name: Brolly AI

Course Provider URL: https://brollyai.com/

Course Mode: Onsite

Duration: 30:00:00

Repeat Count: 2

Repeat Frequency: Daily

Course Type: Paid
